Small Language Models (SLMs) are AI models capable of processing, understanding and generating natural language content. As their name implies, SLMs are smaller in scale and scope than Large Language Models (LLMs).

"Bigger is always better": this principle is deeply rooted in the AI world. The race to build larger models is in full swing, and each month brings reports of new models with even more parameters than their predecessors.

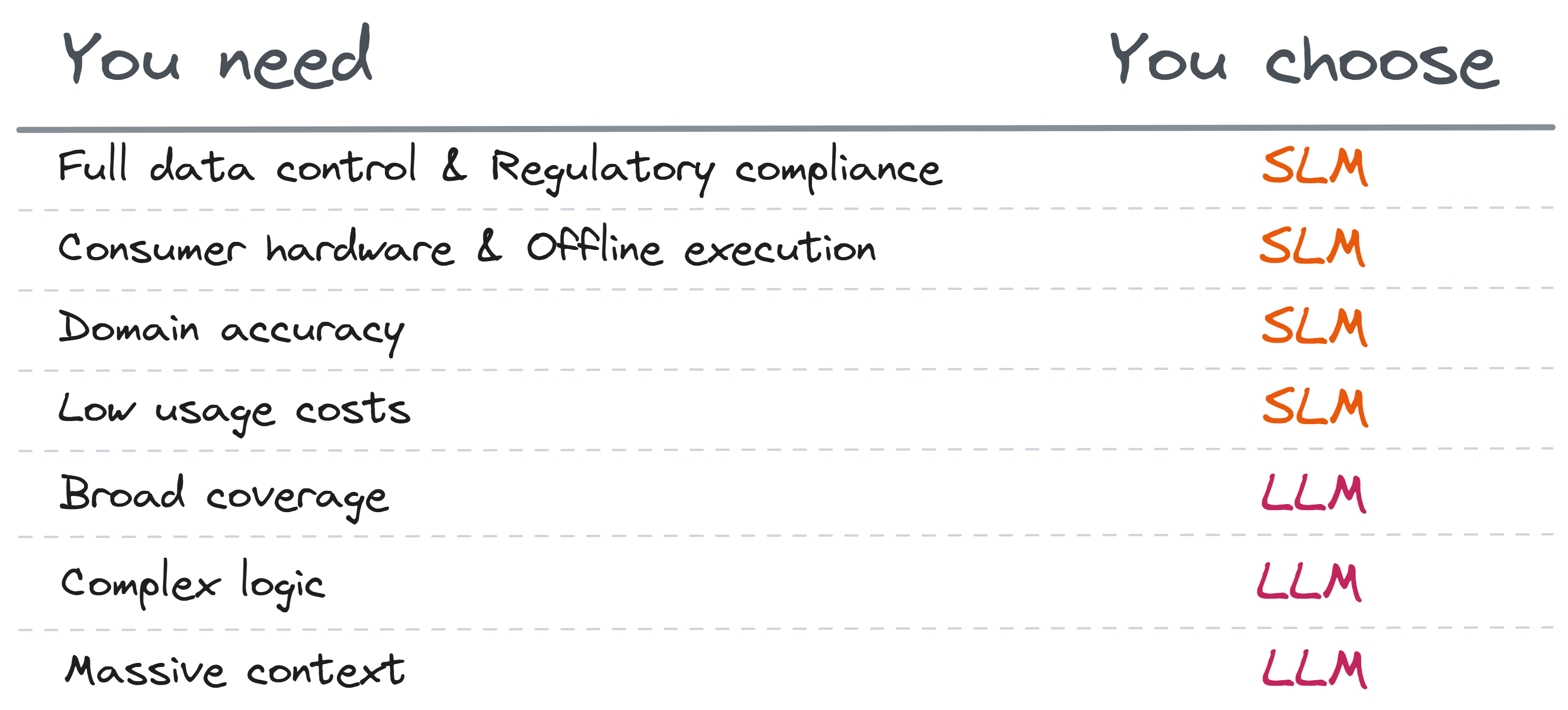

So, is bigger really better? Or could smaller models be the key to unlocking the next wave of AI innovation?

TL;DR

SLMs are lightweight AI models designed to run locally on devices like phones and laptops instead of in the cloud. Unlike LLMs, SLMs prioritize efficiency: they use less energy, respond faster, can work offline, and keep data on the device.

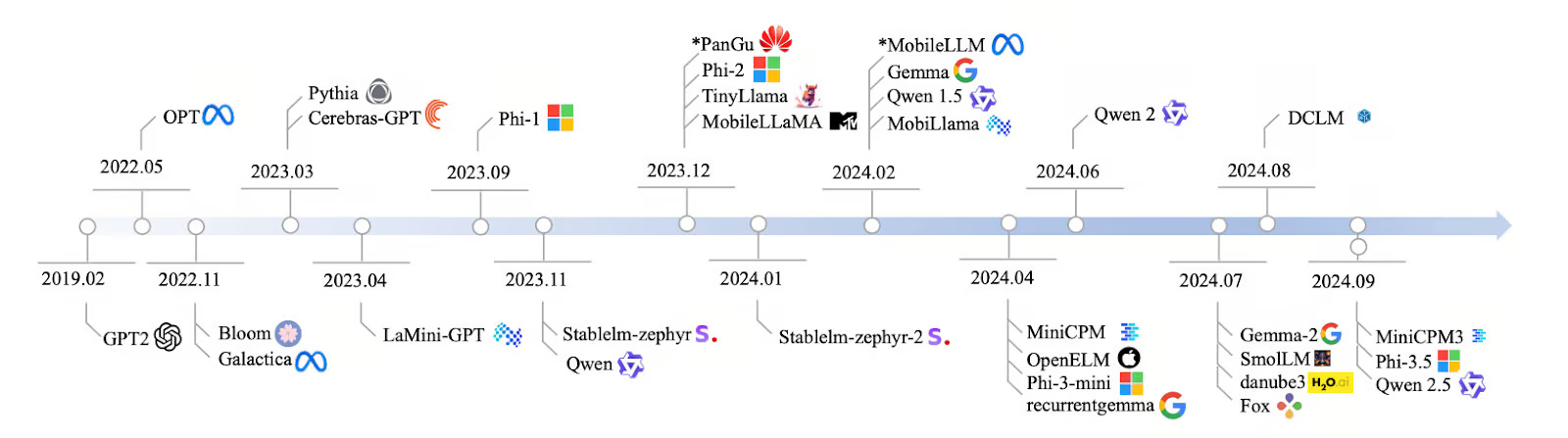

Examples include Google's Gemma, Microsoft's Phi, and Apple's OpenELM.

While much of the AI industry focuses on building bigger models, SLMs show that "smaller and smarter" can be more practical for everyday use, offering privacy, cost savings, and the ability to run AI directly on your personal devices.

What Are Small Language Models?

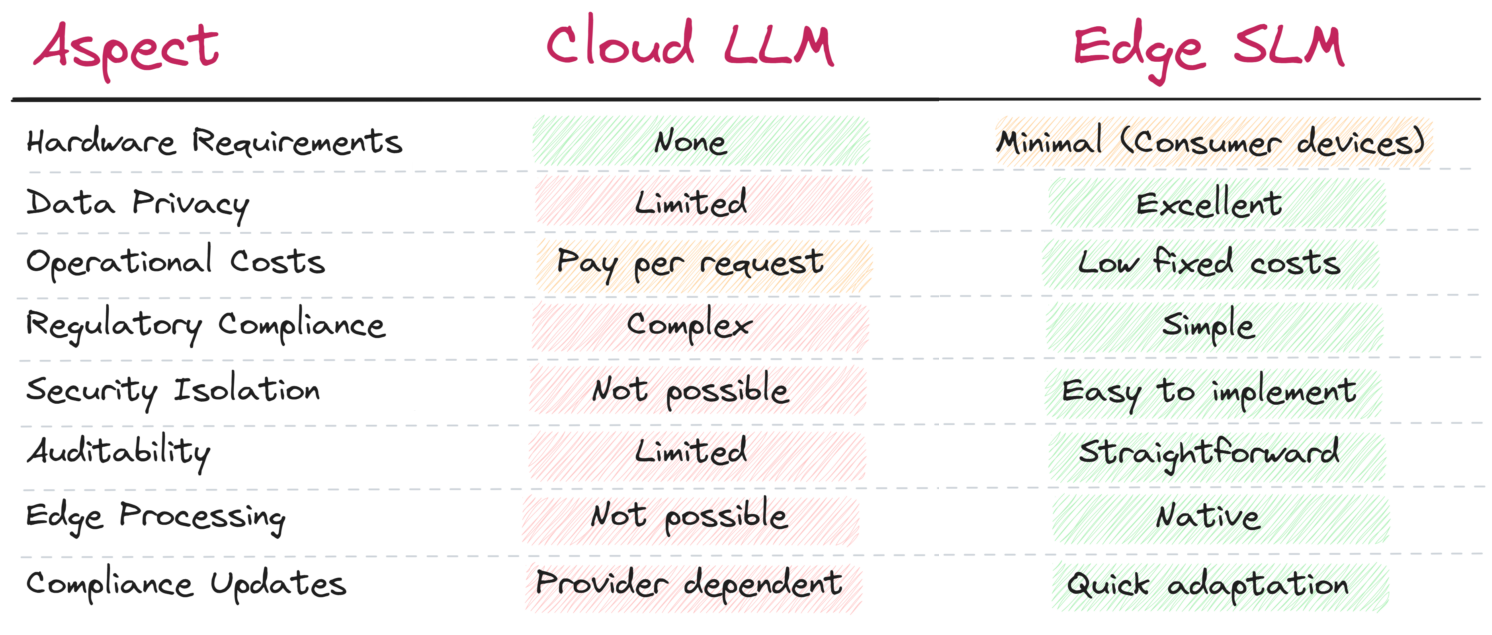

SLMs are compact language models, either trained from scratch or distilled from larger models, that are built to operate efficiently with limited memory and compute resources, typically on devices with CPUs, mobile chipsets, or small GPUs. Unlike LLMs, SLMs are designed to:

- Run locally on edge devices (phones, laptops, microcontrollers)

- Respond in real time with low latency

- Use significantly less energy and bandwidth

- Respect data privacy by avoiding cloud calls

This makes them ideal for use cases where cloud connectivity is intermittent, latency is critical, or data must stay on the device for security or regulatory reasons.
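To make "limited memory" more concrete, the sketch below estimates how much memory a model's weights alone occupy at different numeric precisions (parameter count × bits per parameter ÷ 8). The parameter counts (3B and 70B) are hypothetical values chosen for illustration, not figures for any specific model, and the estimate deliberately ignores activations, caches, and runtime overhead, so real usage will be higher.

```python
def weight_memory_gb(num_params: float, bits_per_param: int) -> float:
    """Approximate memory for model weights only, in gigabytes (1 GB = 1e9 bytes).

    Excludes activations, caches, and framework overhead.
    """
    return num_params * bits_per_param / 8 / 1e9


# Hypothetical parameter counts, for illustration only.
models = {"small (3B params)": 3e9, "large (70B params)": 70e9}
# Common storage precisions, in bits per parameter.
precisions = {"fp16": 16, "int8": 8, "int4": 4}

for name, params in models.items():
    for label, bits in precisions.items():
        print(f"{name:>20} @ {label}: {weight_memory_gb(params, bits):6.1f} GB")
```

Under these assumptions, a 3B-parameter model stored at 4 bits per parameter needs roughly 1.5 GB for its weights, which fits in the memory of many phones and laptops, whereas a 70B-parameter model at 16 bits needs about 140 GB.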

Advantages of Small Language Models

SLMs offer several key advantages, primarily due to their reduced size and computational requirements:

Lightweight and Efficient - Because SLMs have fewer parameters and lower compute requirements, they take up less storage and memory and consume less energy. This makes them well suited to everyday tasks without relying on external cloud infrastructure or an internet connection.

Accessibility - SLMs do not require large-scale cloud infrastructure to be deployed. They can be embedded directly within devices, making them accessible on smaller hardware such as mobile phones and tablets.

Task-Specific Customization - Because SLMs are comparatively inexpensive to train and fine-tune, developers can customize them for specific needs, such as customer support in specialized fields like healthcare and finance, where precise technical information is critical.

Cost-Effectiveness - Compared to LLMs, SLMs need less infrastructure and fewer resources to run, which lowers both hardware and operating costs.

Privacy and Security - When SLMs are deployed on the device, data is processed locally instead of being sent to external servers. This makes SLMs particularly valuable in industries where privacy and security are critical, such as healthcare and finance.

Examples of Small Language Models

Here are some examples of popular SLMs:

Google's Gemma: Gemma is a family of lightweight, state-of-the-art open models built from the same research and technology used to create the Gemini models. Gemma models run across popular device types, including laptops, desktops, IoT devices, mobile devices, and the cloud, making AI capabilities broadly accessible.

Microsoft's Phi: Phi is Microsoft's family of SLMs. These models were created to give developers the tools to run AI directly on a device without needing cloud connectivity. Phi models are released as open source under the MIT License.

Apple's OpenELM: OpenELM is a family of open-source language models designed for efficient on-device processing, intended to run on the local hardware of consumer devices such as iPhones and Macs.

Final Thoughts

The AI landscape isn't just about going bigger; it's about going smarter, and sometimes smaller. SLMs are proving that powerful AI doesn't have to live in a massive data center. It can live right on your phone, your computer, or your enterprise app.

For people seeking privacy, cost savings, speed, and control, SLMs offer a practical, scalable path to embedding AI deeply and responsibly into everyday workflows.

The future of AI isn't just in the cloud; it's in your pocket and on your desk.