The AI landscape is undergoing a fundamental shift. What once required massive cloud infrastructure and internet connectivity is now becoming possible on our personal devices. From smartphones running sophisticated language models to laptops processing complex AI tasks without ever connecting to the cloud, on-device AI is no longer a futuristic concept—it's happening right now.

TL;DR

Cloud AI (also known as online AI) like ChatGPT introduces problems such as:

Latency: You're waiting seconds for basic completions.

Privacy: Your input goes to a remote server.

Reliability: API keys, rate limits, outages.

Cost: $0.06 per 1,000 tokens adds up quickly at scale.

That's where on-device AI (also known as local AI or offline AI) comes in. From improved data protection and lower latency to cost efficiency and better customizability, the reasons for using offline AI are many.

In 2025, you can easily run compact models (under 4GB) such as Phi, Gemma, LLaVA, and DeepSeek on your laptop without a GPU. And they aren't toys: they can write, summarize, classify, translate, code, and more.
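To see how a model can fit under 4GB, a back-of-the-envelope estimate helps: weight storage is roughly the parameter count times the bits per weight. The sketch below is only an approximation. The function name and the 7-billion-parameter example are illustrative assumptions, and real model files need extra room for metadata and runtime memory.

```python
def estimate_weight_size_gb(num_params: float, bits_per_weight: float) -> float:
    """Approximate size of the model weights in gigabytes (1 GB = 1e9 bytes)."""
    total_bytes = num_params * bits_per_weight / 8
    return total_bytes / 1e9


# Hypothetical 7-billion-parameter model at different weight precisions.
params = 7e9
for bits in (16, 8, 4):
    size = estimate_weight_size_gb(params, bits)
    print(f"{bits}-bit weights: ~{size:.1f} GB")
```

Under these assumptions, only the 4-bit version lands below the 4GB mark, which is why low-precision weights matter so much for laptops.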

The Privacy Revolution

Perhaps the most compelling argument for on-device AI is privacy. When your data never leaves your device, you maintain complete control over your information. This shift addresses growing concerns about data breaches, surveillance, and the monetization of personal information by tech giants.

Consider the implications: your personal conversations with AI assistants, sensitive documents being analyzed, and private photos being processed—all happening locally without any third party ever seeing your data. This level of privacy was unimaginable just a few years ago.

OpenAI CEO Sam Altman recently warned that there's no legal confidentiality when using ChatGPT as a therapist. In other words, conversations with ChatGPT aren't truly private, and these chats lack legal protections.

Speed and Reliability

On-device AI removes the network round trip to a cloud server. No more waiting for servers to respond or dealing with connection timeouts. Responses begin as soon as the local model can generate them, creating a more natural and fluid user experience. This advantage becomes even more pronounced in areas with poor internet connectivity.

Reduced Cloud Costs

Every cloud API call to an AI service costs money, whether via usage fees or maintaining servers. By running AI locally, you eliminate per-request costs and can scale to more users without a proportional increase in cloud expense. For startups or apps with thin margins, this is a huge win. It also means you're not at the mercy of cloud rate limits or sudden pricing changes.

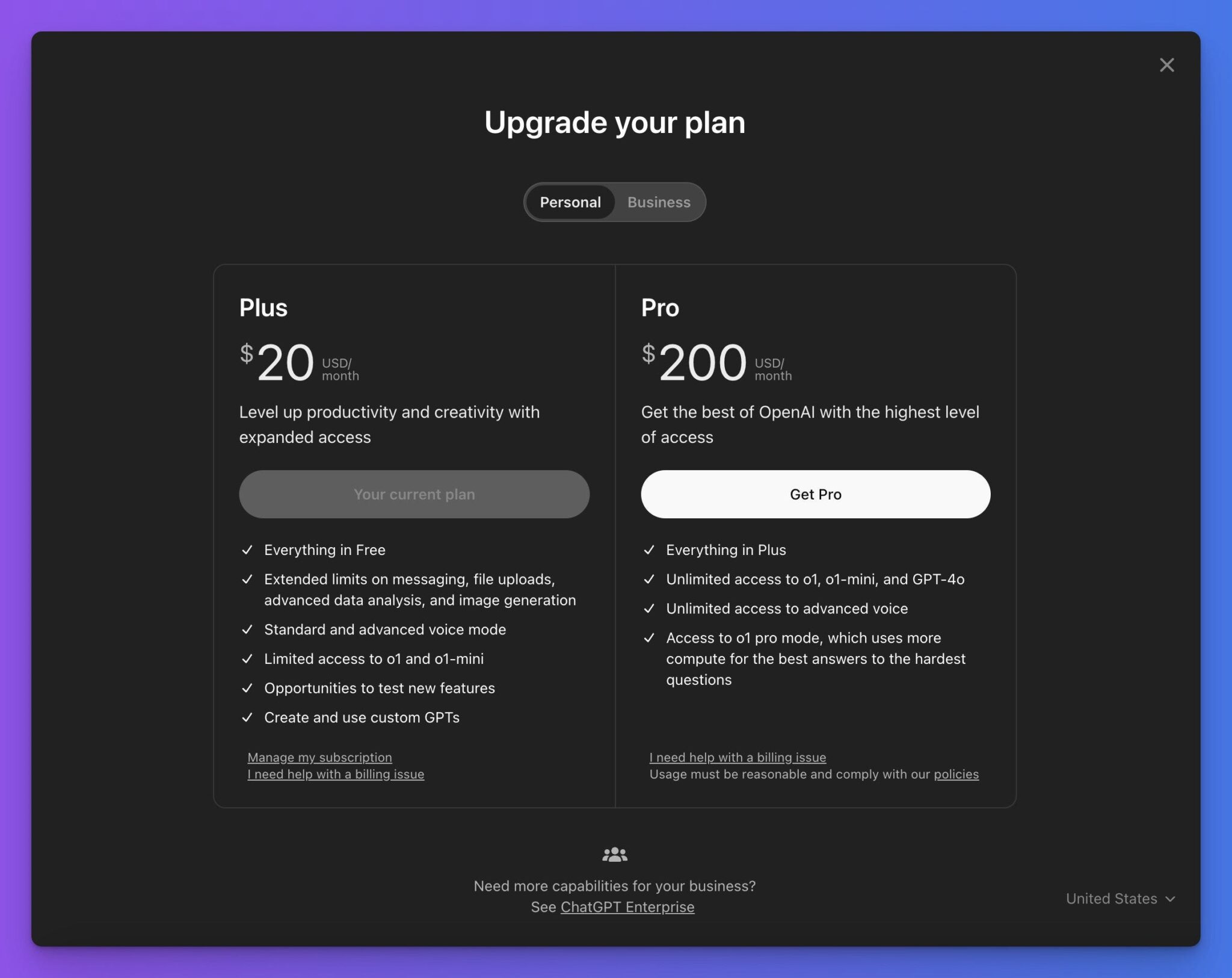

Yes, cloud AI is impressive, but it is also very expensive to maintain. You may have already noticed that subscription prices for cloud-based AI applications have risen. For example, OpenAI's recent announcement of a $200/month Pro plan is a signal that costs are rising, and competitors are likely to move toward similar price levels.
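To make the per-request cost concrete, here is a small sketch that multiplies out a monthly API bill using the $0.06 per 1,000 tokens figure from the TL;DR. The workload numbers (10,000 requests a day, 500 tokens each) are hypothetical and chosen only for illustration. Actual pricing varies by provider and model.

```python
def monthly_cloud_cost(requests_per_day: int,
                       tokens_per_request: int,
                       price_per_1k_tokens: float = 0.06,
                       days: int = 30) -> float:
    """Estimate a monthly API bill from a flat per-token price."""
    tokens_per_month = requests_per_day * tokens_per_request * days
    return tokens_per_month / 1000 * price_per_1k_tokens


# Hypothetical workload: 10,000 requests/day at 500 tokens per request.
cost = monthly_cloud_cost(requests_per_day=10_000, tokens_per_request=500)
print(f"Estimated monthly API cost: ${cost:,.2f}")
```

With these assumed numbers the bill comes to roughly $9,000 a month, and it grows in direct proportion to usage. On-device inference trades that recurring fee for the user's own hardware and battery.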

New Use Cases

Because on-device AI is available anytime and respects privacy, it opens up scenarios that were hard to support with cloud AI. For example, a health app can analyze sensor data or images on-device without transmitting sensitive health data, and a camera app can do live scene understanding without requiring connectivity.

The Technical Challenges

Despite the advantages, on-device AI faces significant hurdles. Modern AI models are resource-intensive, requiring substantial processing power and memory. While hardware continues to improve, running state-of-the-art models locally still demands powerful devices and can impact battery life.

Model optimization techniques like quantization and pruning are helping to compress AI models without significant loss of accuracy, but there's still a trade-off between model capability and device constraints.
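As a rough illustration of what quantization does, the sketch below applies simple symmetric 8-bit quantization to a handful of made-up weights. This is a toy version under stated assumptions (one scale for the whole list, plain Python, invented values), not how any particular framework implements it. Production toolchains use more elaborate schemes.

```python
def quantize_int8(weights):
    """Symmetric quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [round(w / scale) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float values from the stored integers."""
    return [q * scale for q in quantized]


# Made-up weights, for illustration only.
weights = [0.12, -0.5, 0.33, 0.9, -0.07]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)

# The rounding error per weight is bounded by half a quantization step.
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print("quantized:", q)
print("max round-trip error:", max_error)
```

Each weight now needs one byte instead of four (for 32-bit floats), at the price of a small rounding error. That error is the capability trade-off in miniature.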

Industry Adoption

Major tech companies are investing heavily in on-device AI. Apple's Neural Engine, Google's Tensor chips, and specialized AI processors from various manufacturers are making on-device AI more accessible and efficient. This hardware evolution is crucial for widespread adoption.

Microsoft, Google, and Apple have all released Small Language Models (SLMs). Microsoft's Phi, Google's Gemma, and Apple's OpenELM are designed for on-device processing, offering high performance, memory efficiency, and enhanced user privacy by eliminating reliance on cloud systems.

Enterprises are particularly interested in on-device AI for handling sensitive data in regulated industries like healthcare and finance, where data privacy requirements make cloud-based solutions challenging.

Looking Forward

Are we ready to go all-in on on-device AI? The answer depends on your priorities and use cases. For privacy-conscious users and applications requiring guaranteed availability, on-device AI is already compelling. For others, the convenience and power of cloud AI may still outweigh the benefits of local processing.

What's certain is that on-device AI will continue to improve rapidly. As hardware becomes more powerful and models become more efficient, the choice between cloud and on-device AI will increasingly favor local processing for many applications.

The future of AI is likely hybrid—combining the privacy and reliability of on-device processing with the scale and capabilities of cloud computing when needed. But for 2025 and beyond, on-device AI is positioned to become the preferred choice for users who value privacy, speed, and reliability above all else.
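One way to picture the hybrid approach is a small router that keeps private or offline requests on the device and sends only large, non-sensitive jobs to the cloud. Everything in this sketch is hypothetical: the `Request` fields, the `LOCAL_PROMPT_LIMIT` threshold, and the routing rules are assumptions for illustration, not a description of any shipping product.

```python
from dataclasses import dataclass


@dataclass
class Request:
    prompt: str
    contains_sensitive_data: bool
    is_online: bool


# Hypothetical budget: prompts longer than this are treated as too heavy
# for the local model.
LOCAL_PROMPT_LIMIT = 2000  # characters


def choose_backend(request: Request) -> str:
    """Return "local" or "cloud" for a request, privacy and availability first."""
    # Sensitive data never leaves the device; offline requests cannot.
    if request.contains_sensitive_data or not request.is_online:
        return "local"
    # Large, non-sensitive jobs can use the cloud's extra capacity.
    if len(request.prompt) > LOCAL_PROMPT_LIMIT:
        return "cloud"
    return "local"


print(choose_backend(Request("Summarize my lab results", True, True)))
print(choose_backend(Request("x" * 5000, False, True)))
```

The ordering of the checks is the design choice that matters here: privacy and availability are decided before capability, so the cloud is only ever a fallback for work that is safe to send.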