As AI continues to advance, running powerful models like Qwen3 entirely on local devices is becoming more attractive. Moving away from cloud-based services brings real advantages: better privacy, lower costs, and the ability to work offline. Local AI setups let developers and enthusiasts experiment without paying API fees or worrying about where their data goes. This article walks you through setting up your own local AI environment.

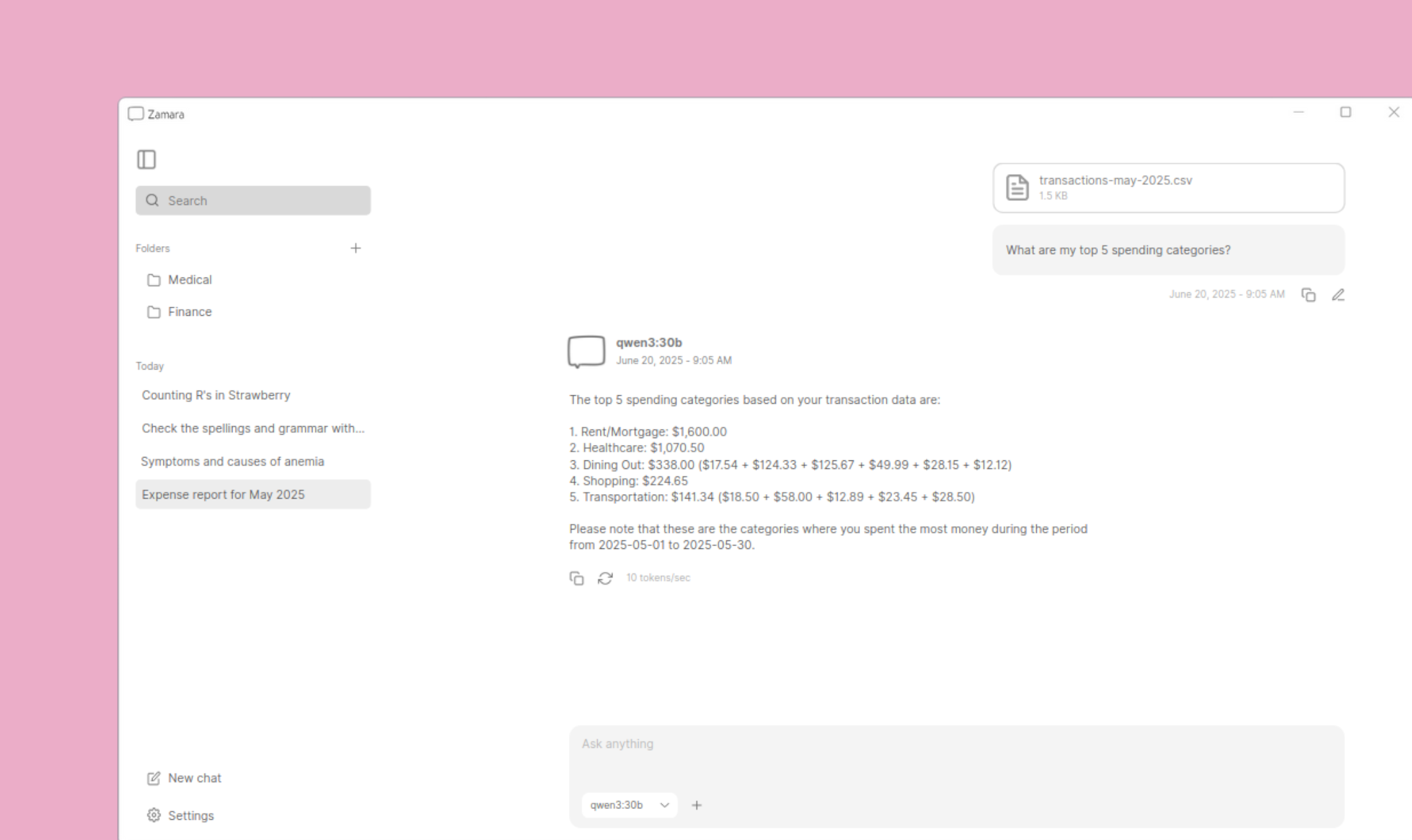

One thing to keep in mind is that, when it comes to performance, Ollama is not the best option. You can achieve up to 30% more tokens per second with Zamara.
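You can check a speed claim like this on your own machine by measuring tokens per second directly from Ollama. Ollama's local REST API (by default at http://localhost:11434) reports `eval_count` (tokens generated) and `eval_duration` (in nanoseconds) in each non-streaming response. Below is a minimal Python sketch. It assumes the Ollama server is running and a model named `qwen3:30b` has been created as in step 5. The helper names are only for illustration.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434"  # Ollama's default local address (assumed unchanged)


def tokens_per_second(eval_count: int, eval_duration_ns: int) -> float:
    """Generation speed from Ollama's eval_count and eval_duration (nanoseconds)."""
    if eval_duration_ns <= 0:
        raise ValueError("eval_duration must be positive")
    return eval_count / (eval_duration_ns / 1e9)


def measure(model: str, prompt: str) -> float:
    """Send one non-streaming /api/generate request and return tokens per second."""
    payload = json.dumps({"model": model, "prompt": prompt, "stream": False}).encode("utf-8")
    req = urllib.request.Request(
        f"{OLLAMA_URL}/api/generate",
        data=payload,
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(req) as resp:
        result = json.load(resp)
    # eval_count / eval_duration cover only the generated tokens, not prompt processing.
    return tokens_per_second(result["eval_count"], result["eval_duration"])
```

Calling `measure("qwen3:30b", "Explain GGUF in one sentence.")` returns the speed for that single prompt. Averaging a few runs gives a steadier figure to compare against other runtimes.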

Steps

Here are the steps we'll take to run Qwen3 locally with Ollama:

1. Download Ollama on Windows

2. Get the download link for Qwen3 from Hugging Face

3. Download Qwen3 with Internet Download Manager

4. Make the Modelfile with Notepad

5. Make Qwen3 loadable with Ollama

6. Use Qwen3 in Ollama

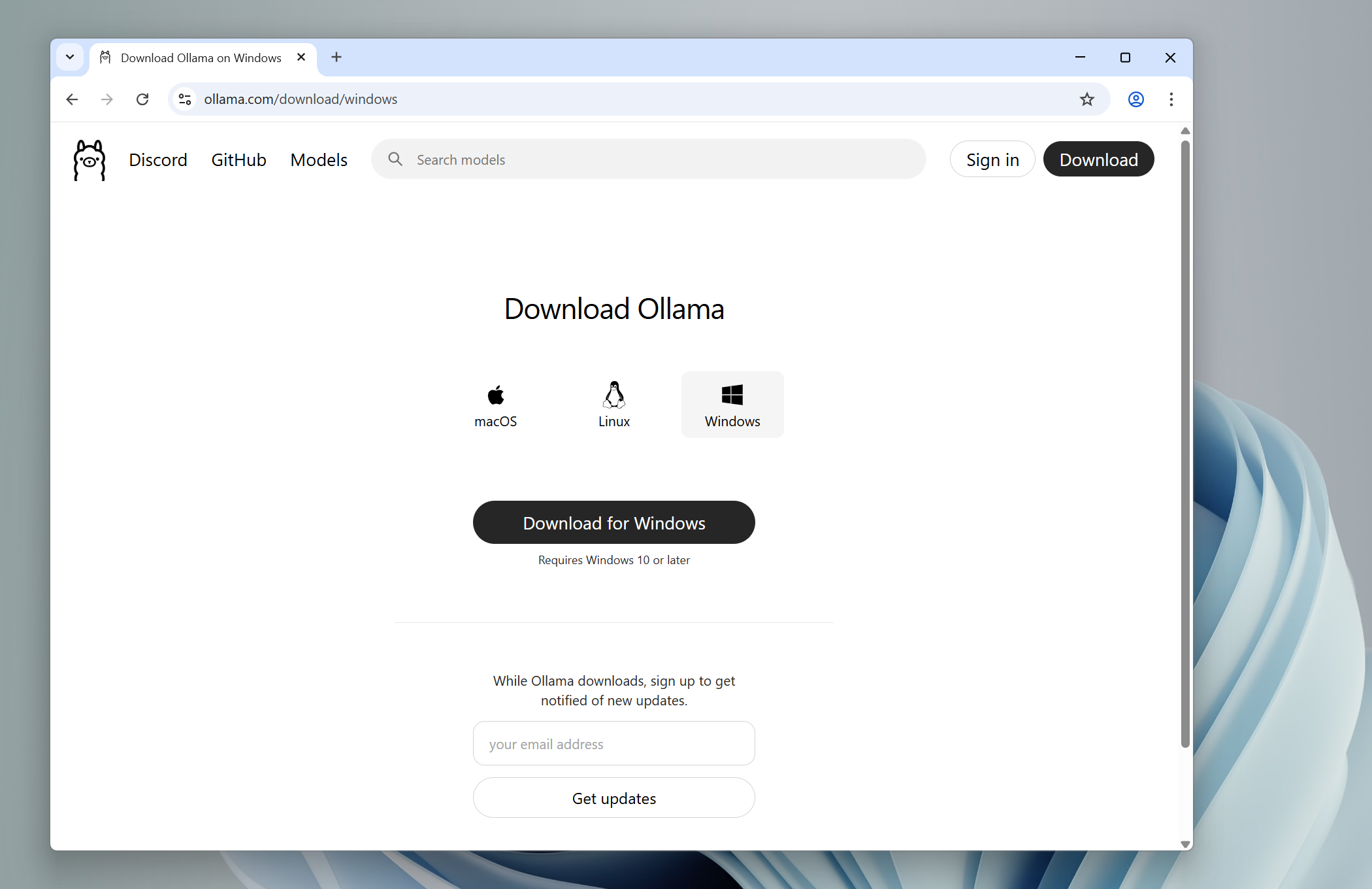

1. Download Ollama on Windows

Ollama's new app is now available for Windows. Let's start by going to the Ollama website and downloading the program. Make sure to get the Windows version.

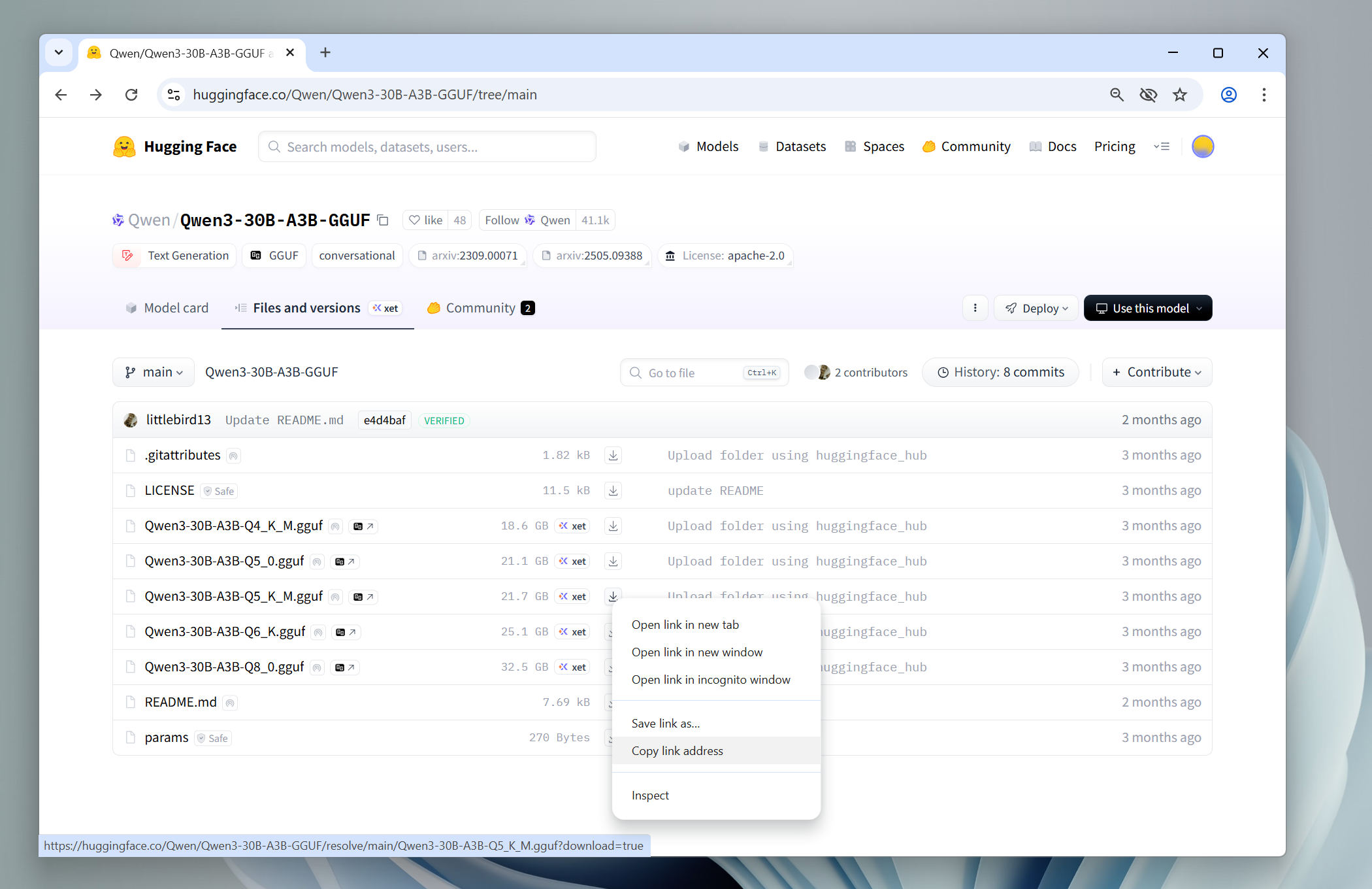

2. Get the download link for Qwen3 from Hugging Face

You can get the models you like directly from Hugging Face, and that's exactly what we're going to do. Go to the Hugging Face website and find Qwen3. In this case, we'll use Qwen3 30B (Qwen3-30B-A3B) with Q5_K_M quantization. This is the link to all the files and versions. From there, copy the download link of the Q5_K_M .gguf file:

https://huggingface.co/Qwen/Qwen3-30B-A3B-GGUF/tree/main

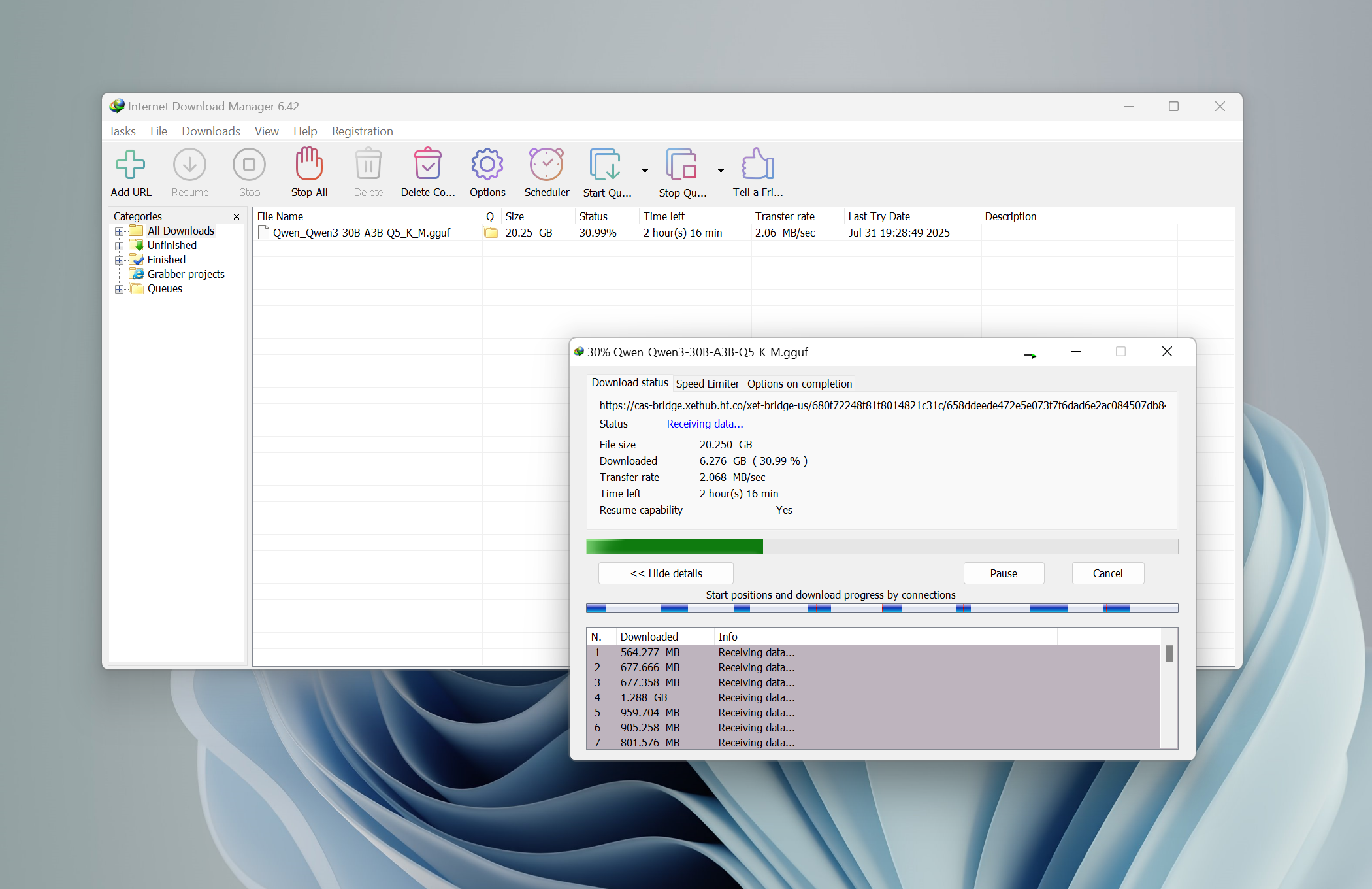
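The page above is a file listing. The link a download manager needs is the raw file URL, which Hugging Face serves under `/resolve/<revision>/<filename>`. This small Python sketch converts the "Files and versions" URL into that direct link. The filename `Qwen3-30B-A3B-Q5_K_M.gguf` in the example is an assumption, so copy the exact name from the file list.

```python
from urllib.parse import urlparse


def resolve_url(tree_url: str, filename: str) -> str:
    """Turn a Hugging Face "Files and versions" URL into a direct download URL.

    Expects URLs shaped like https://huggingface.co/<owner>/<repo>/tree/<revision>.
    """
    parts = urlparse(tree_url).path.strip("/").split("/")
    if len(parts) < 4 or parts[2] != "tree":
        raise ValueError(f"not a Hugging Face tree URL: {tree_url}")
    owner, repo, revision = parts[0], parts[1], parts[3]
    # Raw file contents are served from /resolve/<revision>/<filename>.
    return f"https://huggingface.co/{owner}/{repo}/resolve/{revision}/{filename}"
```

For example, `resolve_url("https://huggingface.co/Qwen/Qwen3-30B-A3B-GGUF/tree/main", "Qwen3-30B-A3B-Q5_K_M.gguf")` gives `https://huggingface.co/Qwen/Qwen3-30B-A3B-GGUF/resolve/main/Qwen3-30B-A3B-Q5_K_M.gguf`.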

3. Download Qwen3 with Internet Download Manager

Now we'll use that link to download the model with Internet Download Manager (IDM). Why aren't we downloading the model with Ollama? Because it's very slow! Why not use Chrome? Because large downloads keep breaking partway through! IDM splits each download across several connections, which can make it up to 10 times faster, and it lets you resume or schedule downloads. So, go to the Internet Download Manager website, download and install it, then add the model link as a new download.

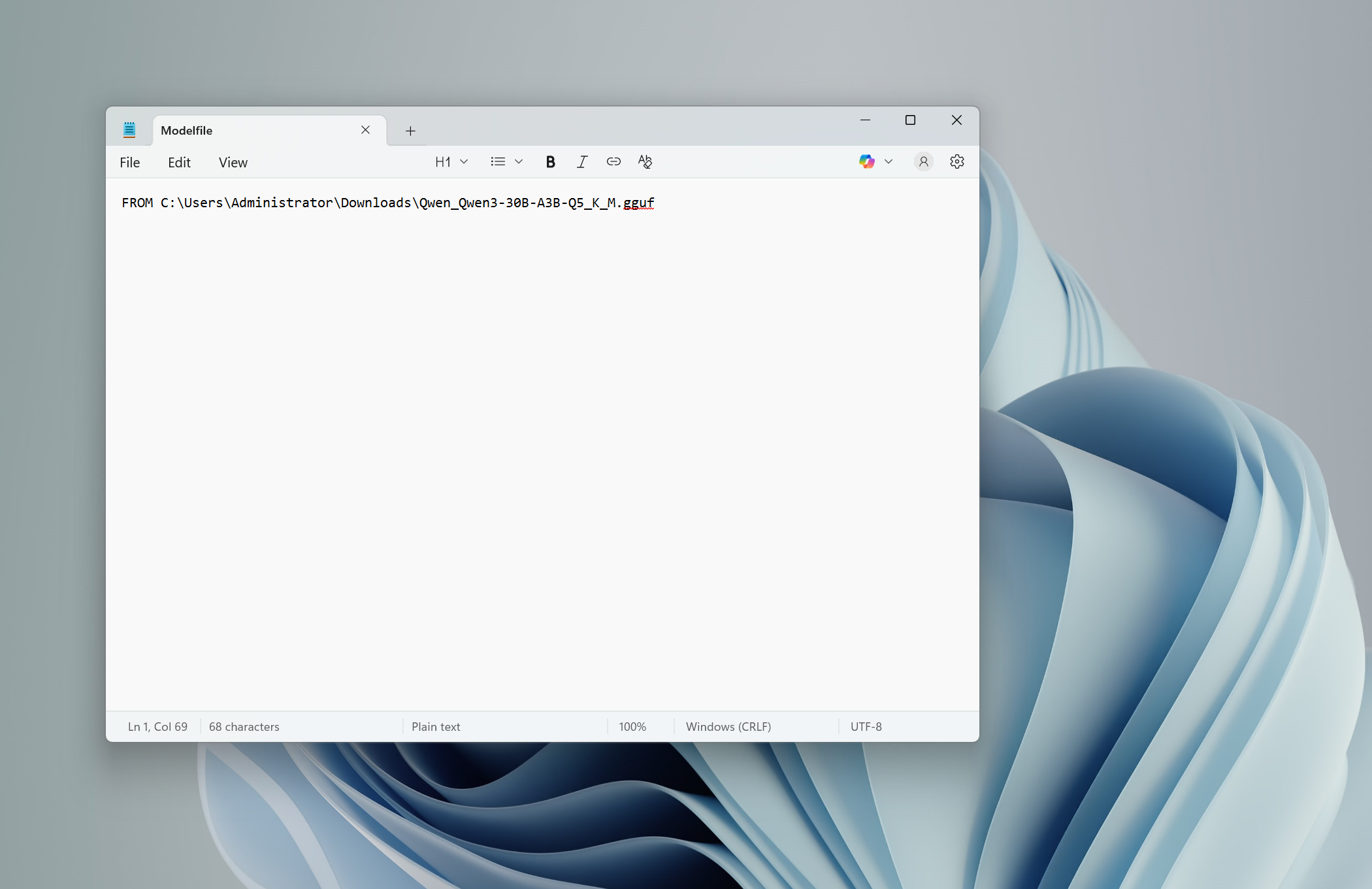
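Both IDM features mentioned above rest on plain HTTP. Multi-connection downloads fetch separate byte ranges of the same file, and resuming asks the server only for the bytes not yet on disk, using a `Range` header. The Python sketch below illustrates both ideas with the standard library. It is not how IDM is implemented, and the function names are hypothetical. Resuming only works when the server answers a Range request with 206 Partial Content.

```python
import os
import urllib.request


def split_ranges(total_size: int, parts: int) -> list[tuple[int, int]]:
    """Split a file of total_size bytes into inclusive (start, end) byte ranges,
    one per connection, as used in "Range: bytes=start-end" headers."""
    if total_size <= 0 or parts <= 0:
        raise ValueError("total_size and parts must be positive")
    parts = min(parts, total_size)
    chunk, extra = divmod(total_size, parts)
    ranges, start = [], 0
    for i in range(parts):
        # The first `extra` ranges get one extra byte so the sizes add up exactly.
        size = chunk + (1 if i < extra else 0)
        ranges.append((start, start + size - 1))
        start += size
    return ranges


def resume_request(url: str, partial_path: str) -> urllib.request.Request:
    """Build a GET request that asks only for the bytes not yet on disk."""
    offset = os.path.getsize(partial_path) if os.path.exists(partial_path) else 0
    headers = {"Range": f"bytes={offset}-"} if offset else {}
    return urllib.request.Request(url, headers=headers)


def download_resumable(url: str, path: str, chunk_size: int = 1 << 20) -> None:
    """Download url to path, appending to a partial file when the server allows it."""
    with urllib.request.urlopen(resume_request(url, path)) as resp:
        # 206 Partial Content: the server honored Range, so append to the file.
        # 200 OK: the server sent the whole file, so start over.
        mode = "ab" if resp.status == 206 else "wb"
        with open(path, mode) as out:
            while chunk := resp.read(chunk_size):
                out.write(chunk)
```

If the file is already complete, the server typically rejects the range with 416, and `urlopen` raises an `HTTPError`, which a real tool would treat as "done".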

4. Make the Modelfile with Notepad

A Modelfile is the blueprint for creating and sharing models with Ollama: a set of instructions that defines how a model should behave when you run it. You can create one with a simple text editor like Notepad. Create a file named Modelfile with no extension. In Notepad's Save dialog, set "Save as type" to "All Files", otherwise it gets saved as Modelfile.txt. Add the following line to it, replacing the path with the location of your downloaded .gguf file:

FROM C:\Users\Administrator\Downloads\Qwen_Qwen3-30B-A3B-Q5_K_M.gguf

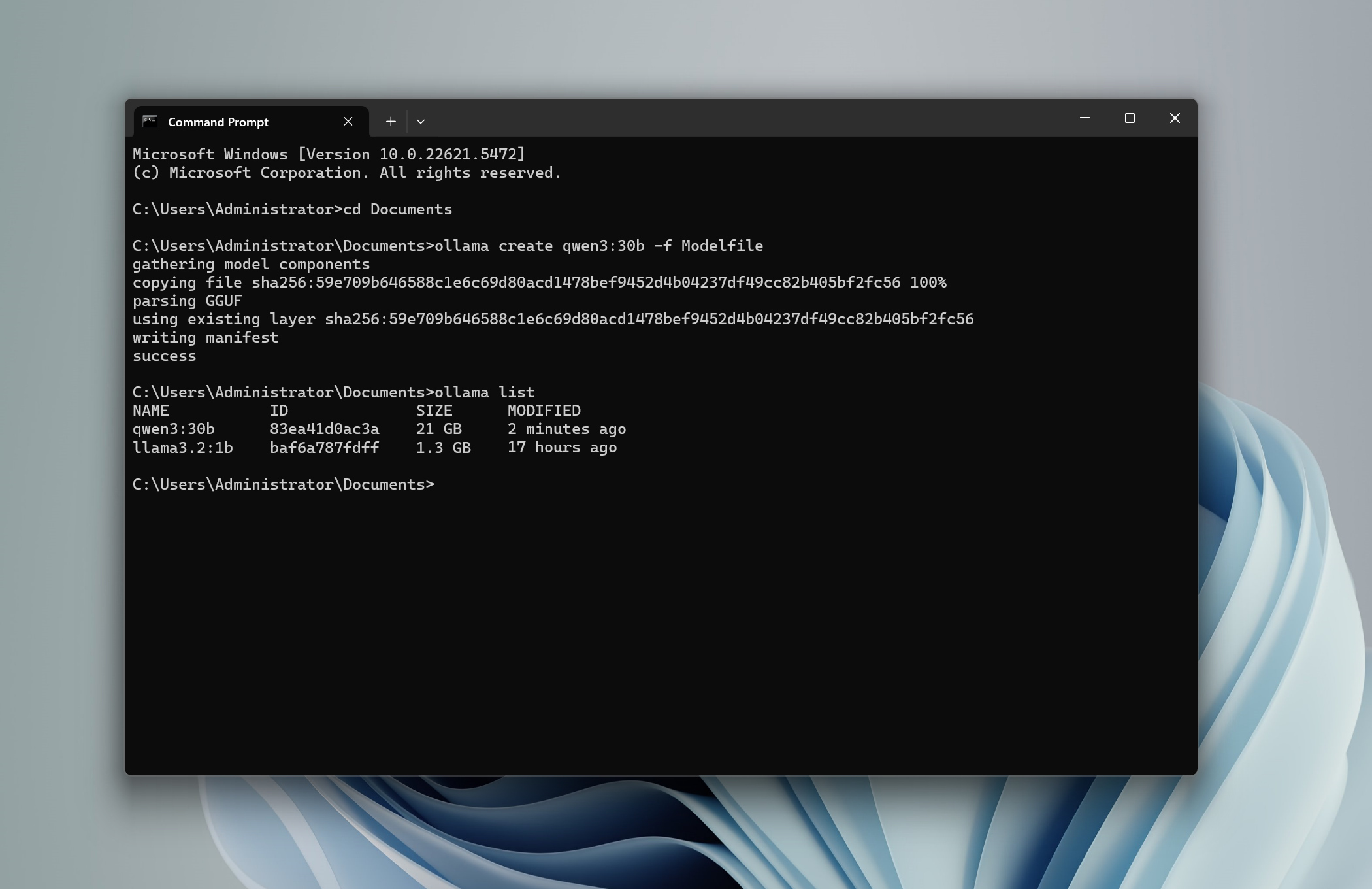
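If you'd rather skip Notepad's extension handling altogether, the same one-line Modelfile can be written from Python. This is a minimal sketch. The function name is illustrative, and it writes only the FROM instruction shown above.

```python
from pathlib import Path


def write_modelfile(gguf_path: str, out_dir: str = ".") -> Path:
    """Write a minimal Ollama Modelfile (no extension) that points at a local GGUF file."""
    if not gguf_path.lower().endswith(".gguf"):
        raise ValueError("expected a path to a .gguf file")
    # Named exactly "Modelfile": no .txt suffix, unlike Notepad's default.
    modelfile = Path(out_dir) / "Modelfile"
    modelfile.write_text(f"FROM {gguf_path}\n", encoding="utf-8")
    return modelfile
```

For example, `write_modelfile(r"C:\Users\Administrator\Downloads\Qwen_Qwen3-30B-A3B-Q5_K_M.gguf")` creates Modelfile in the current folder. The raw string keeps the Windows backslashes intact.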

5. Make Qwen3 loadable with Ollama

To import our .gguf model into Ollama, we use the ollama create command. Open a terminal in the folder that contains the Modelfile and run:

ollama create qwen3:30b -f Modelfile

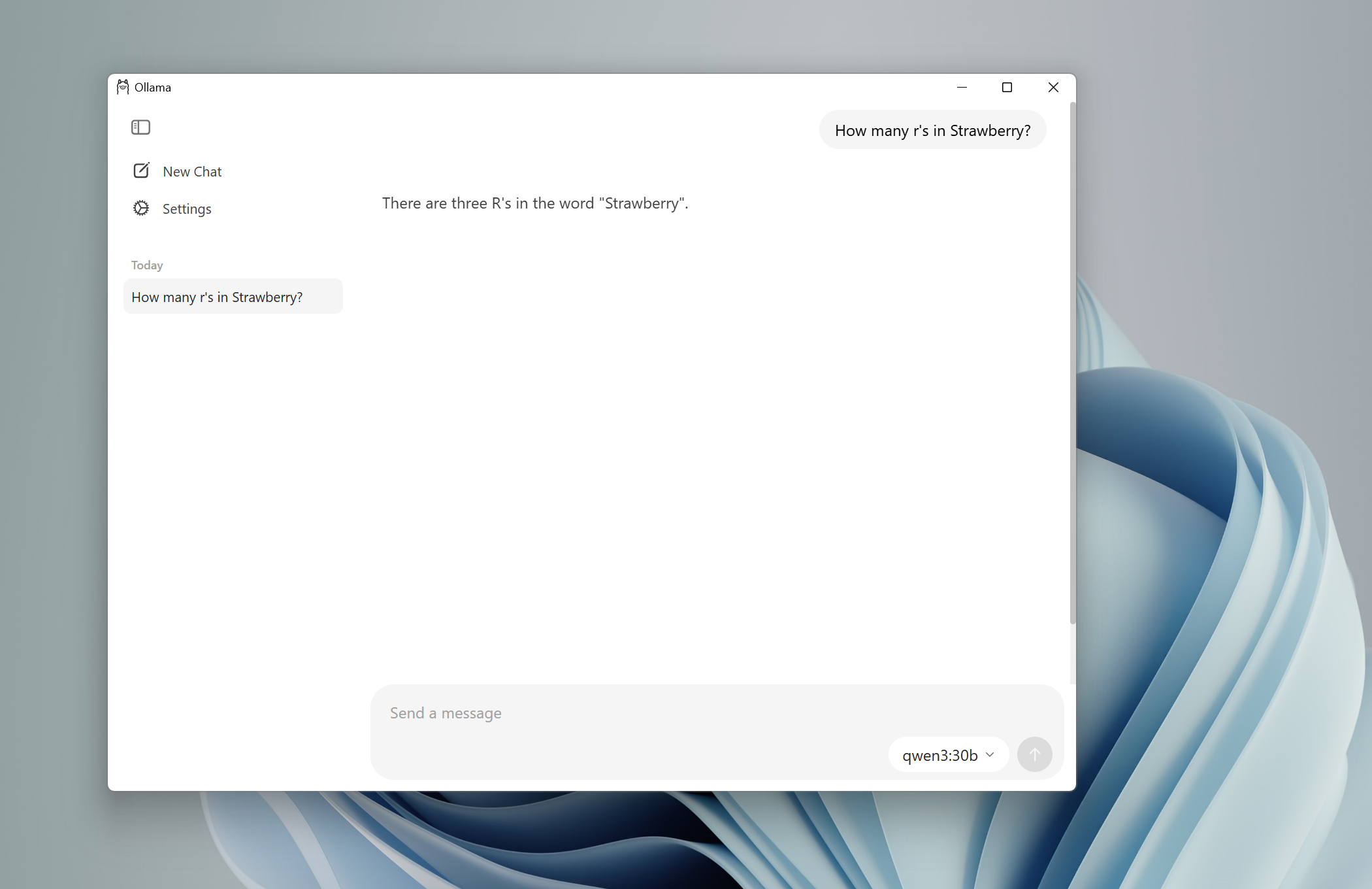
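To confirm the import worked, you can ask the running Ollama server which models it knows about. Its REST API lists local models at `GET /api/tags`, the same information `ollama list` prints. The Python sketch below assumes the default address http://localhost:11434. The helper names are hypothetical.

```python
import json
import urllib.request


def has_model(tags: dict, name: str) -> bool:
    """Return True if an /api/tags response lists a model with this name."""
    return any(m.get("name") == name for m in tags.get("models", []))


def fetch_tags(base_url: str = "http://localhost:11434") -> dict:
    """Ask the local Ollama server which models it has (GET /api/tags)."""
    with urllib.request.urlopen(f"{base_url}/api/tags") as resp:
        return json.load(resp)
```

After the create command finishes, `has_model(fetch_tags(), "qwen3:30b")` should return True.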

6. Use Qwen3 in Ollama

Finally, open Ollama's desktop app, select the model, and start chatting. If you prefer the terminal, you can chat with the same model using:

ollama run qwen3:30b
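The model is also reachable from your own programs through Ollama's local REST API, which is handy for scripting. Below is a minimal Python sketch of a single-turn, non-streaming request to `POST /api/chat`. It assumes the default address and the `qwen3:30b` name used in step 5. The function names are illustrative.

```python
import json
import urllib.request


def build_chat_payload(model: str, user_message: str) -> bytes:
    """JSON body for a single-turn, non-streaming /api/chat request."""
    return json.dumps({
        "model": model,
        "messages": [{"role": "user", "content": user_message}],
        "stream": False,  # one JSON object back instead of a stream of chunks
    }).encode("utf-8")


def chat(model: str, user_message: str, base_url: str = "http://localhost:11434") -> str:
    """Send one message to a local Ollama model and return the reply text."""
    req = urllib.request.Request(
        f"{base_url}/api/chat",
        data=build_chat_payload(model, user_message),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(req) as resp:
        return json.load(resp)["message"]["content"]
```

For example, `chat("qwen3:30b", "Summarize what a Modelfile does.")` returns the assistant's reply as a string. For a multi-turn conversation, append each reply to `messages` before sending the next question.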